- doc/load.rst: 45 additions, 0 deletions

- doc/maintainers.rst: 90 additions, 0 deletions

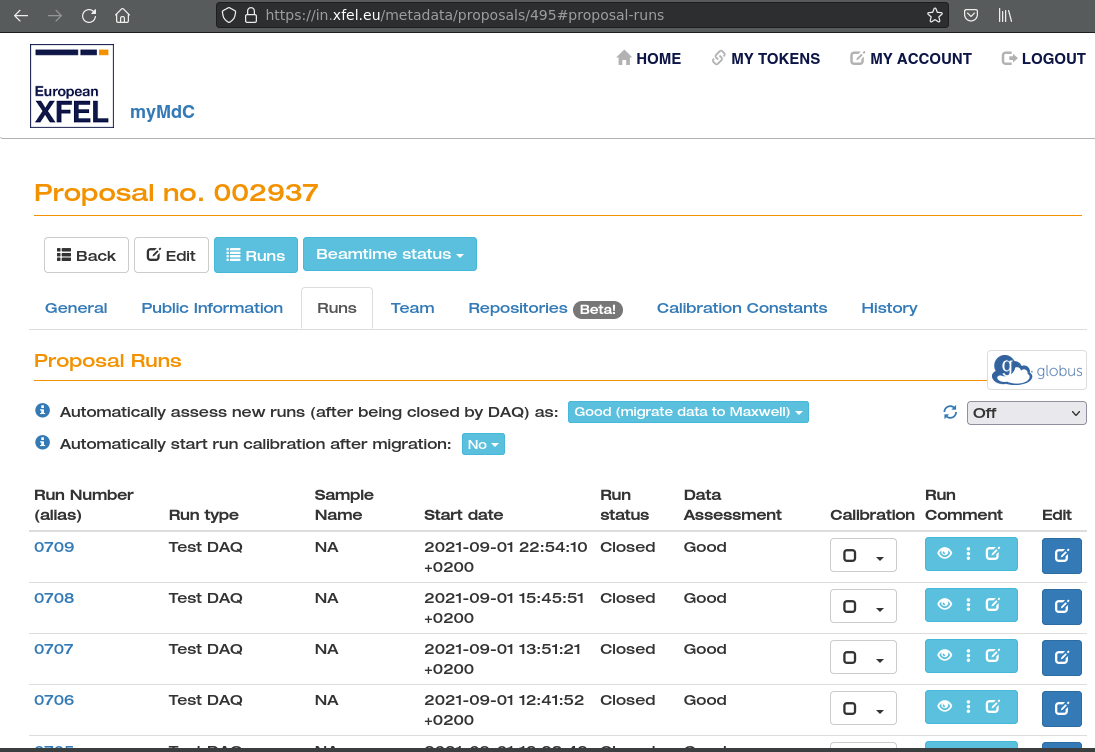

- doc/metadata.png: 0 additions, 0 deletions

- doc/requirements.txt: 6 additions, 0 deletions

- doc/scripts/bin_dssc_module_job.sh: 52 additions, 0 deletions

- doc/scripts/boz_parameters_job.sh: 58 additions, 0 deletions

- doc/scripts/format_data.py: 50 additions, 0 deletions

- doc/scripts/format_data.sh: 19 additions, 0 deletions

- doc/scripts/process_data_201007_23h.py: 168 additions, 0 deletions

- doc/scripts/start_job_single.sh: 16 additions, 0 deletions

- doc/scripts/start_processing_all.sh: 20 additions, 0 deletions

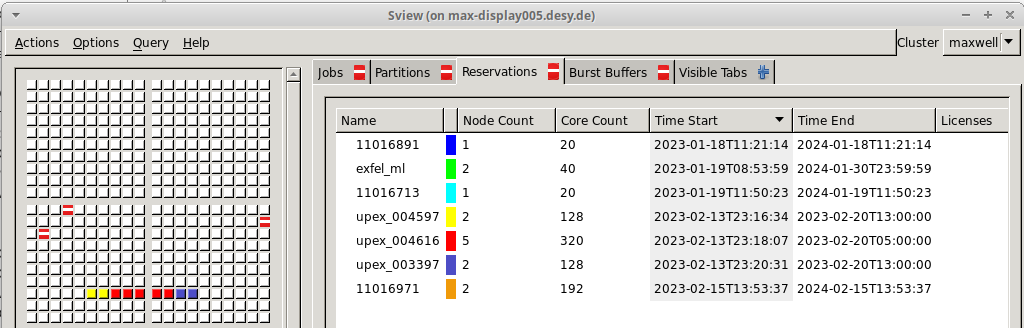

- doc/sview.png: 0 additions, 0 deletions

- doc/transient reflectivity.rst: 9 additions, 0 deletions

- knife_edge.py: 0 additions, 83 deletions

- notebook_examples/XAS and XMCD energy shift investigation.ipynb: 343 additions, 0 deletions

- notebook_examples/tim-normalization.ipynb: 437 additions, 0 deletions

- setup.py: 40 additions, 0 deletions

- src/toolbox_scs/__init__.py: 22 additions, 0 deletions

- src/toolbox_scs/base/__init__.py: 5 additions, 0 deletions

- src/toolbox_scs/base/knife_edge.py: 167 additions, 0 deletions

doc/load.rst: new file, mode 0 → 100644

doc/maintainers.rst: new file, mode 0 → 100644

doc/metadata.png: new file, mode 0 → 100644, 136 KiB

doc/requirements.txt: new file, mode 0 → 100644

doc/scripts/bin_dssc_module_job.sh: new file, mode 0 → 100644

doc/scripts/boz_parameters_job.sh: new file, mode 0 → 100644

doc/scripts/format_data.py: new file, mode 0 → 100644

doc/scripts/format_data.sh: new file, mode 0 → 100644

doc/scripts/process_data_201007_23h.py: new file, mode 0 → 100644

doc/scripts/start_job_single.sh: new file, mode 0 → 100644

doc/scripts/start_processing_all.sh: new file, mode 0 → 100644

doc/sview.png: new file, mode 0 → 100644, 47.1 KiB

doc/transient reflectivity.rst: new file, mode 0 → 100644

knife_edge.py: deleted, mode 100644 → 0

notebook_examples/tim-normalization.ipynb: new file, mode 0 → 100644

setup.py: new file, mode 0 → 100644

src/toolbox_scs/__init__.py: new file, mode 0 → 100644

src/toolbox_scs/base/__init__.py: new file, mode 0 → 100644

src/toolbox_scs/base/knife_edge.py: new file, mode 0 → 100644
