Commits on Source (41)

- Giuseppe Mercurio authored eee6b817

- Giuseppe Mercurio authored 128a4350: KB bending averager. See merge request !119

- Giuseppe Mercurio authored 0b75640d

- Giuseppe Mercurio authored dfbd3615: Kb bending correct. See merge request !120

- Giuseppe Mercurio authored 96660d17

- Giuseppe Mercurio authored 7763655c: Mte3. See merge request !123

- Giuseppe Mercurio authored 05f8f9ce

- Giuseppe Mercurio authored 1e336929: sam-z-motor. See merge request !124

- Giuseppe Mercurio authored 98365cd4

- Giuseppe Mercurio authored 5a1c7557: sam-z-motor-pos. See merge request !125

- Loïc Le Guyader authored 6a1f33ea

- Loïc Le Guyader authored 38002f30: Fix for the BOZ analysis. See merge request !126

- Loïc Le Guyader authored 1872bed0

- Loïc Le Guyader authored 9588b2df: Display roi rectangles in inspect_rois. See merge request !127

- Loïc Le Guyader authored 01a43eb8

- Loïc Le Guyader authored 928e0f2c: Fix flat field correction display in BOZ analysis. See merge request !128

- Laurent Mercadier authored b7de9468

- Laurent Mercadier authored d57ea8dd: Updated PES documentation. See merge request !129

- Laurent Mercadier authored edaaf493

- Laurent Mercadier authored 2758a4ac: Fix SA3 bunch pattern reading in get_pes_tof(). See merge request !130

- Loïc Le Guyader authored d02553fb

- Loïc Le Guyader authored 340b60da: BOZ slurm update. See merge request !131

- Loïc Le Guyader authored 16f530f4: Change sum to mean such that horizontal and vertical t threshold above dark level should be the same

- Loïc Le Guyader authored 88c305b1: Boz fix3. See merge request !132

- Loïc Le Guyader authored d965fd2c

- Loïc Le Guyader authored 1472715b: Update BOZ I notebook for 2.25 MHz data and 0.33 ph/bin dark figure. See merge request !133

- Loïc Le Guyader authored 540f8ebd

- Loïc Le Guyader authored 1fe93611: Fix histogram on data with no saturation value. See merge request !134

- Loïc Le Guyader authored 82d17134

- Loïc Le Guyader authored 3ac0cdea: Boz issue26. Closes #26. See merge request !135

- Loïc Le Guyader authored 0126db83

- Loïc Le Guyader authored f76d59cb: Boz saturation and rois threshold parametrized. See merge request !136

- Laurent Mercadier authored a9bb93a8

- Laurent Mercadier authored a9c07d4b

- Laurent Mercadier authored b625028f

- Laurent Mercadier authored 475f9003: PES: Check the size of raw trace against pulse pattern. See merge request !137

- Laurent Mercadier authored efdf89b2: Get pes fix. See merge request !138

- Loïc Le Guyader authored 41d3cc0e

- Loïc Le Guyader authored 35f1fa8c: Add sat_level to the sbatch script with default at 500. See merge request !139

- Loïc Le Guyader authored 8cb48609

- Loïc Le Guyader authored a3bf8adf: Setup documentation. See merge request !140

Showing

- doc/BOZ analysis part I parameters determination.ipynb: 73 additions, 97 deletions

- doc/BOZ analysis part II run processing.ipynb: 4220 additions, 166 deletions

- doc/PES_spectra_extraction.ipynb: 22 additions, 30 deletions

- doc/getting_started.rst: 63 additions, 8 deletions

- doc/howtos.rst: 37 additions, 5 deletions

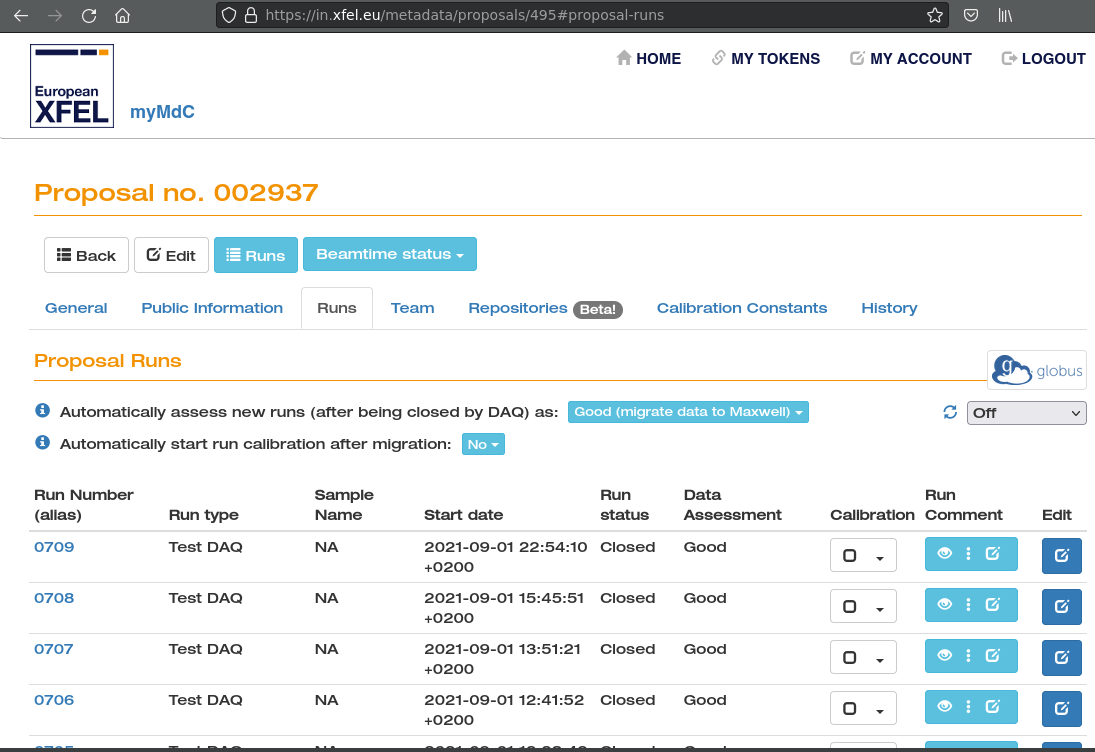

- doc/metadata.png: 0 additions, 0 deletions

- scripts/boz_parameters_job.sh: 24 additions, 0 deletions

- setup.py: 2 additions, 2 deletions

- src/toolbox_scs/constants.py: 22 additions, 6 deletions

- src/toolbox_scs/detectors/pes.py: 28 additions, 12 deletions

- src/toolbox_scs/routines/boz.py: 153 additions, 102 deletions

doc/metadata.png

0 → 100644

136 KiB

scripts/boz_parameters_job.sh

0 → 100644